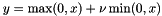

Rectified Linear Unit non-linearity $y = \max(0, x)$. The simple max is fast to compute, and the function does not saturate.
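To make "does not saturate" concrete, the following minimal sketch compares the derivative of ReLU with that of the logistic sigmoid. It assumes plain `double` scalars. `relu_grad` and `sigmoid_grad` are hypothetical helpers and are not part of the Caffe API. The sigmoid is included only as a point of comparison. ReLU's derivative stays at 1 for every positive input, while the sigmoid's derivative shrinks toward 0 as the input grows.

```cpp
#include <cmath>

// Hypothetical helper (not Caffe API): derivative of y = max(0, x).
// It is 1 for any x > 0, however large; x <= 0 (including x == 0) gives 0.
double relu_grad(double x) { return x > 0.0 ? 1.0 : 0.0; }

// Hypothetical helper, for comparison only: derivative of the logistic
// sigmoid s(x) = 1 / (1 + e^-x), which is s(x) * (1 - s(x)).
// It peaks at 0.25 when x == 0 and decays toward 0 as |x| grows (saturation).
double sigmoid_grad(double x) {
  const double s = 1.0 / (1.0 + std::exp(-x));
  return s * (1.0 - s);
}
```

For example, `relu_grad(10.0)` is `1.0`, while `sigmoid_grad(10.0)` is roughly 4.5e-5. A gradient flowing back through a saturated sigmoid is therefore scaled down by that factor.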


#include <relu_layer.hpp>

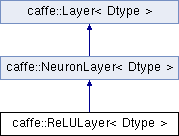

Inheritance diagram for caffe::ReLULayer< Dtype >:

Public Member Functions

| Type | Member | Description |
|---|---|---|
| | ReLULayer (const LayerParameter &param) | |
| virtual const char * | type () const | Returns the layer type. |

Public Member Functions inherited from caffe::NeuronLayer< Dtype >

| Type | Member | Description |
|---|---|---|
| | NeuronLayer (const LayerParameter &param) | |
| virtual void | Reshape (const vector< Blob< Dtype > *> &bottom, const vector< Blob< Dtype > *> &top) | Adjust the shapes of top blobs and internal buffers to accommodate the shapes of the bottom blobs. |
| virtual int | ExactNumBottomBlobs () const | Returns the exact number of bottom blobs required by the layer, or -1 if no exact number is required. |
| virtual int | ExactNumTopBlobs () const | Returns the exact number of top blobs required by the layer, or -1 if no exact number is required. |

Public Member Functions inherited from caffe::Layer< Dtype >

| Type | Member | Description |
|---|---|---|
| | Layer (const LayerParameter &param) | |
| void | SetUp (const vector< Blob< Dtype > *> &bottom, const vector< Blob< Dtype > *> &top) | Implements common layer setup functionality. |
| virtual void | LayerSetUp (const vector< Blob< Dtype > *> &bottom, const vector< Blob< Dtype > *> &top) | Does layer-specific setup: your layer should implement this function as well as Reshape. |
| Dtype | Forward (const vector< Blob< Dtype > *> &bottom, const vector< Blob< Dtype > *> &top) | Given the bottom blobs, compute the top blobs and the loss. |
| void | Backward (const vector< Blob< Dtype > *> &top, const vector< bool > &propagate_down, const vector< Blob< Dtype > *> &bottom) | Given the top blob error gradients, compute the bottom blob error gradients. |
| vector< shared_ptr< Blob< Dtype > > > & | blobs () | Returns the vector of learnable parameter blobs. |
| const LayerParameter & | layer_param () const | Returns the layer parameter. |
| virtual void | ToProto (LayerParameter *param, bool write_diff=false) | Writes the layer parameter to a protocol buffer. |
| Dtype | loss (const int top_index) const | Returns the scalar loss associated with a top blob at a given index. |
| void | set_loss (const int top_index, const Dtype value) | Sets the loss associated with a top blob at a given index. |
| virtual int | MinBottomBlobs () const | Returns the minimum number of bottom blobs required by the layer, or -1 if no minimum number is required. |
| virtual int | MaxBottomBlobs () const | Returns the maximum number of bottom blobs required by the layer, or -1 if no maximum number is required. |
| virtual int | MinTopBlobs () const | Returns the minimum number of top blobs required by the layer, or -1 if no minimum number is required. |
| virtual int | MaxTopBlobs () const | Returns the maximum number of top blobs required by the layer, or -1 if no maximum number is required. |
| virtual bool | EqualNumBottomTopBlobs () const | Returns true if the layer requires an equal number of bottom and top blobs. |
| virtual bool | AutoTopBlobs () const | Return whether "anonymous" top blobs are created automatically by the layer. |
| virtual bool | AllowForceBackward (const int bottom_index) const | Return whether to allow force_backward for a given bottom blob index. |
| bool | param_propagate_down (const int param_id) | Specifies whether the layer should compute gradients w.r.t. a parameter at a particular index given by param_id. |
| void | set_param_propagate_down (const int param_id, const bool value) | Sets whether the layer should compute gradients w.r.t. a parameter at a particular index given by param_id. |

Protected Member Functions

| Type | Member | Description |
|---|---|---|
| virtual void | Forward_cpu (const vector< Blob< Dtype > *> &bottom, const vector< Blob< Dtype > *> &top) | |
| virtual void | Forward_gpu (const vector< Blob< Dtype > *> &bottom, const vector< Blob< Dtype > *> &top) | Using the GPU device, compute the layer output. Fall back to Forward_cpu() if unavailable. |
| virtual void | Backward_cpu (const vector< Blob< Dtype > *> &top, const vector< bool > &propagate_down, const vector< Blob< Dtype > *> &bottom) | Computes the error gradient w.r.t. the ReLU inputs. |
| virtual void | Backward_gpu (const vector< Blob< Dtype > *> &top, const vector< bool > &propagate_down, const vector< Blob< Dtype > *> &bottom) | Using the GPU device, compute the gradients for any parameters and for the bottom blobs if propagate_down is true. Fall back to Backward_cpu() if unavailable. |

Protected Member Functions inherited from caffe::Layer< Dtype >

| Type | Member |
|---|---|
| virtual void | CheckBlobCounts (const vector< Blob< Dtype > *> &bottom, const vector< Blob< Dtype > *> &top) |
| void | SetLossWeights (const vector< Blob< Dtype > *> &top) |

Additional Inherited Members

Protected Attributes inherited from caffe::Layer< Dtype >

| Type | Attribute |
|---|---|
| LayerParameter | layer_param_ |
| Phase | phase_ |
| vector< shared_ptr< Blob< Dtype > > > | blobs_ |
| vector< bool > | param_propagate_down_ |
| vector< Dtype > | loss_ |

Detailed Description

template<typename Dtype>

class caffe::ReLULayer< Dtype >

Rectified Linear Unit non-linearity $y = \max(0, x)$. The simple max is fast to compute, and the function does not saturate.


Constructor & Destructor Documentation

◆ ReLULayer()

template<typename Dtype >

caffe::ReLULayer< Dtype >::ReLULayer (const LayerParameter &param) [inline], [explicit]

- Parameters
  - param: provides ReLUParameter relu_param, with ReLULayer options:
    - negative_slope (optional, default 0): the value $\nu$ by which negative values are multiplied.
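The following worked illustration uses arbitrarily chosen values $\nu = 0.1$, $x = 3$ and $x = -2$. It applies the output rule $y = \max(0, x) + \nu \min(0, x)$ from the Forward_cpu documentation. A positive input passes through unchanged, while a negative input is scaled by $\nu$:

$$
\begin{aligned}
x = 3: &\quad y = \max(0, 3) + 0.1 \cdot \min(0, 3) = 3 \\
x = -2: &\quad y = \max(0, -2) + 0.1 \cdot \min(0, -2) = -0.2
\end{aligned}
$$

With the default $\nu = 0$, the second line gives $y = 0$, which is the plain ReLU.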

Member Function Documentation

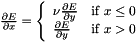

◆ Backward_cpu()

template<typename Dtype >

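void caffe::ReLULayer< Dtype >::Backward_cpu (const vector< Blob< Dtype > *> &top, const vector< bool > &propagate_down, const vector< Blob< Dtype > *> &bottom) [protected], [virtual]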

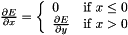

Computes the error gradient w.r.t. the ReLU inputs.

- Parameters
  - top: output Blob vector (length 1), providing the error gradients $\frac{\partial E}{\partial y}$ with respect to the computed outputs $y$.
  - propagate_down: see Layer::Backward.
  - bottom: input Blob vector (length 1), the inputs $x$. If propagate_down[0] is set, Backward fills their diff with the gradients

    $$\frac{\partial E}{\partial x} = \begin{cases} 0 & \text{if } x \le 0 \\ \frac{\partial E}{\partial y} & \text{if } x > 0 \end{cases}$$

    by default. If a non-zero negative_slope $\nu$ is provided, the computed gradients are

    $$\frac{\partial E}{\partial x} = \begin{cases} \nu \frac{\partial E}{\partial y} & \text{if } x \le 0 \\ \frac{\partial E}{\partial y} & \text{if } x > 0 \end{cases}$$

Implements caffe::Layer< Dtype >.
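The following minimal sketch shows the piecewise gradient rule. It assumes contiguous `std::vector<float>` storage in place of Caffe's `Blob` and its `diff` buffers. `relu_backward` is a hypothetical name. This is an illustration of the rule, not the code in src/caffe/layers/relu_layer.cpp.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// Hypothetical helper (not Caffe API): ReLU backward pass on plain vectors.
//   bottom_data    : the forward inputs x
//   top_diff       : dE/dy for each output
//   negative_slope : nu (0 gives the plain ReLU)
// Returns dE/dx, where dE/dx = dE/dy if x > 0 and nu * dE/dy if x <= 0.
std::vector<float> relu_backward(const std::vector<float>& bottom_data,
                                 const std::vector<float>& top_diff,
                                 float negative_slope = 0.0f) {
  assert(bottom_data.size() == top_diff.size());
  std::vector<float> bottom_diff(bottom_data.size());
  for (std::size_t i = 0; i < bottom_data.size(); ++i) {
    // Positive inputs pass the gradient through; x <= 0 scales it by nu.
    const float scale = bottom_data[i] > 0.0f ? 1.0f : negative_slope;
    bottom_diff[i] = top_diff[i] * scale;
  }
  return bottom_diff;
}
```

The case of exactly $x = 0$ takes the $x \le 0$ branch, which matches the convention stated above. For example, `relu_backward({-1.0f, 0.0f, 2.0f}, {4.0f, 4.0f, 4.0f}, 0.5f)` yields `{2.0f, 2.0f, 4.0f}`.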

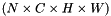

◆ Forward_cpu()

template<typename Dtype >

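void caffe::ReLULayer< Dtype >::Forward_cpu (const vector< Blob< Dtype > *> &bottom, const vector< Blob< Dtype > *> &top) [protected], [virtual]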

- Parameters
  - bottom: input Blob vector (length 1), the inputs $x$.
  - top: output Blob vector (length 1), the computed outputs $y = \max(0, x)$ by default. If a non-zero negative_slope $\nu$ is provided, the computed outputs are $y = \max(0, x) + \nu \min(0, x)$.

Implements caffe::Layer< Dtype >.
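The following minimal sketch implements the forward rule $y = \max(0, x) + \nu \min(0, x)$. It assumes contiguous `std::vector<float>` storage in place of a Caffe `Blob`. `relu_forward` is a hypothetical name and is not the code in src/caffe/layers/relu_layer.cpp.

```cpp
#include <algorithm>
#include <cstddef>
#include <vector>

// Hypothetical helper (not Caffe API): ReLU forward pass on plain vectors.
// Computes y = max(0, x) + nu * min(0, x) element-wise; the default
// negative_slope of 0 reduces this to y = max(0, x).
std::vector<float> relu_forward(const std::vector<float>& bottom_data,
                                float negative_slope = 0.0f) {
  std::vector<float> top_data(bottom_data.size());
  for (std::size_t i = 0; i < bottom_data.size(); ++i) {
    const float x = bottom_data[i];
    // Only one of the two terms is non-zero for any given x.
    top_data[i] = std::max(x, 0.0f) + negative_slope * std::min(x, 0.0f);
  }
  return top_data;
}
```

Writing the rule as a sum of a max term and a scaled min term avoids an explicit branch. With `negative_slope = 0.5f`, the input `{-2.0f, 0.0f, 3.0f}` maps to `{-1.0f, 0.0f, 3.0f}`.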

The documentation for this class was generated from the following files:

- include/caffe/layers/relu_layer.hpp

- src/caffe/layers/relu_layer.cpp

1.8.13
