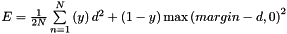

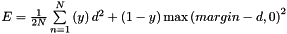

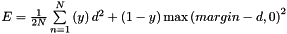

Computes the contrastive loss $E = \frac{1}{2N} \sum_{n=1}^{N} \left[ y\, d^2 + (1 - y) \max(\mathrm{margin} - d, 0)^2 \right]$, where $d = \lVert a_n - b_n \rVert_2$. This can be used to train siamese networks.

#include <contrastive_loss_layer.hpp>

Public Member Functions

| Return type | Member | Description |
| --- | --- | --- |
| | ContrastiveLossLayer (const LayerParameter &param) | |
| virtual void | LayerSetUp (const vector< Blob< Dtype > *> &bottom, const vector< Blob< Dtype > *> &top) | Does layer-specific setup: your layer should implement this function as well as Reshape. |
| virtual int | ExactNumBottomBlobs () const | Returns the exact number of bottom blobs required by the layer, or -1 if no exact number is required. |
| virtual const char * | type () const | Returns the layer type. |
| virtual bool | AllowForceBackward (const int bottom_index) const | |

Public Member Functions inherited from caffe::LossLayer< Dtype >

| Return type | Member | Description |
| --- | --- | --- |
| | LossLayer (const LayerParameter &param) | |
| virtual void | Reshape (const vector< Blob< Dtype > *> &bottom, const vector< Blob< Dtype > *> &top) | Adjust the shapes of top blobs and internal buffers to accommodate the shapes of the bottom blobs. |
| virtual bool | AutoTopBlobs () const | For convenience and backwards compatibility, instruct the Net to automatically allocate a single top Blob for LossLayers, into which they output their singleton loss (even if the user didn't specify one in the prototxt, etc.). |
| virtual int | ExactNumTopBlobs () const | Returns the exact number of top blobs required by the layer, or -1 if no exact number is required. |

Public Member Functions inherited from caffe::Layer< Dtype >

| Return type | Member | Description |
| --- | --- | --- |
| | Layer (const LayerParameter &param) | |
| void | SetUp (const vector< Blob< Dtype > *> &bottom, const vector< Blob< Dtype > *> &top) | Implements common layer setup functionality. |
| Dtype | Forward (const vector< Blob< Dtype > *> &bottom, const vector< Blob< Dtype > *> &top) | Given the bottom blobs, compute the top blobs and the loss. |
| void | Backward (const vector< Blob< Dtype > *> &top, const vector< bool > &propagate_down, const vector< Blob< Dtype > *> &bottom) | Given the top blob error gradients, compute the bottom blob error gradients. |
| vector< shared_ptr< Blob< Dtype > > > & | blobs () | Returns the vector of learnable parameter blobs. |
| const LayerParameter & | layer_param () const | Returns the layer parameter. |
| virtual void | ToProto (LayerParameter *param, bool write_diff=false) | Writes the layer parameter to a protocol buffer. |
| Dtype | loss (const int top_index) const | Returns the scalar loss associated with a top blob at a given index. |
| void | set_loss (const int top_index, const Dtype value) | Sets the loss associated with a top blob at a given index. |
| virtual int | MinBottomBlobs () const | Returns the minimum number of bottom blobs required by the layer, or -1 if no minimum number is required. |
| virtual int | MaxBottomBlobs () const | Returns the maximum number of bottom blobs required by the layer, or -1 if no maximum number is required. |
| virtual int | MinTopBlobs () const | Returns the minimum number of top blobs required by the layer, or -1 if no minimum number is required. |
| virtual int | MaxTopBlobs () const | Returns the maximum number of top blobs required by the layer, or -1 if no maximum number is required. |
| virtual bool | EqualNumBottomTopBlobs () const | Returns true if the layer requires an equal number of bottom and top blobs. |
| bool | param_propagate_down (const int param_id) | Specifies whether the layer should compute gradients w.r.t. a parameter at a particular index given by param_id. |
| void | set_param_propagate_down (const int param_id, const bool value) | Sets whether the layer should compute gradients w.r.t. a parameter at a particular index given by param_id. |

Protected Member Functions

| Return type | Member | Description |
| --- | --- | --- |
| virtual void | Forward_cpu (const vector< Blob< Dtype > *> &bottom, const vector< Blob< Dtype > *> &top) | Computes the contrastive loss $E = \frac{1}{2N} \sum_{n=1}^{N} \left[ y\, d^2 + (1 - y) \max(\mathrm{margin} - d, 0)^2 \right]$, where $d = \lVert a_n - b_n \rVert_2$. This can be used to train siamese networks. |
| virtual void | Forward_gpu (const vector< Blob< Dtype > *> &bottom, const vector< Blob< Dtype > *> &top) | Using the GPU device, compute the layer output. Fall back to Forward_cpu() if unavailable. |
| virtual void | Backward_cpu (const vector< Blob< Dtype > *> &top, const vector< bool > &propagate_down, const vector< Blob< Dtype > *> &bottom) | Computes the Contrastive error gradient w.r.t. the inputs. |
| virtual void | Backward_gpu (const vector< Blob< Dtype > *> &top, const vector< bool > &propagate_down, const vector< Blob< Dtype > *> &bottom) | Using the GPU device, compute the gradients for any parameters and for the bottom blobs if propagate_down is true. Fall back to Backward_cpu() if unavailable. |

Protected Member Functions inherited from caffe::Layer< Dtype >

| Return type | Member |
| --- | --- |
| virtual void | CheckBlobCounts (const vector< Blob< Dtype > *> &bottom, const vector< Blob< Dtype > *> &top) |
| void | SetLossWeights (const vector< Blob< Dtype > *> &top) |

Protected Attributes

| Type | Name |
| --- | --- |
| Blob< Dtype > | diff_ |
| Blob< Dtype > | dist_sq_ |
| Blob< Dtype > | diff_sq_ |
| Blob< Dtype > | summer_vec_ |

Protected Attributes inherited from caffe::Layer< Dtype >

| Type | Name |
| --- | --- |
| LayerParameter | layer_param_ |
| Phase | phase_ |
| vector< shared_ptr< Blob< Dtype > > > | blobs_ |
| vector< bool > | param_propagate_down_ |
| vector< Dtype > | loss_ |

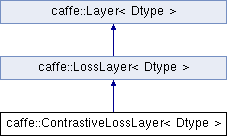

Detailed Description

template<typename Dtype>

class caffe::ContrastiveLossLayer< Dtype >

Computes the contrastive loss $E = \frac{1}{2N} \sum_{n=1}^{N} \left[ y\, d^2 + (1 - y) \max(\mathrm{margin} - d, 0)^2 \right]$, where $d = \lVert a_n - b_n \rVert_2$. This can be used to train siamese networks.

Member Function Documentation

◆ AllowForceBackward()

virtual bool AllowForceBackward (const int bottom_index) const [inline, virtual]

Unlike most loss layers, in the ContrastiveLossLayer we can backpropagate to the first two inputs.

Reimplemented from caffe::LossLayer< Dtype >.
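The rule described above can be illustrated with a small stand-in. This is a minimal sketch, not the Caffe class: the struct name `ContrastiveLossLayerSketch` is hypothetical, and the index layout (0 and 1 for the two feature inputs, 2 for the similarity label) is taken from the parameter descriptions on this page.

```cpp
#include <cassert>

// Hypothetical stand-in that mirrors the documented behaviour.
// It does not derive from caffe::LossLayer and is for illustration only.
struct ContrastiveLossLayerSketch {
  // Three bottoms are expected: features a, features b, similarity label.
  int ExactNumBottomBlobs() const { return 3; }

  // Gradients may be forced for the two feature inputs (indices 0 and 1),
  // but not for the similarity label (index 2).
  bool AllowForceBackward(int bottom_index) const {
    assert(bottom_index >= 0 && bottom_index < ExactNumBottomBlobs());
    return bottom_index != 2;
  }
};
```

With this layout, a caller can force gradients for both branches of a siamese network while the label input stays gradient-free.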

◆ Backward_cpu()

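virtual void Backward_cpu (const vector< Blob< Dtype > *> &top, const vector< bool > &propagate_down, const vector< Blob< Dtype > *> &bottom) [protected, virtual]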

Computes the Contrastive error gradient w.r.t. the inputs.

Computes the gradients with respect to the two input vectors (bottom[0] and bottom[1]), but not the similarity label (bottom[2]).

Parameters

- top: output Blob vector (length 1), providing the error gradient with respect to the outputs
- propagate_down: see Layer::Backward.
- bottom: input Blob vector (length 2)
  - $(N \times C \times 1 \times 1)$ the features $a$; Backward fills their diff with gradients if propagate_down[0]
  - $(N \times C \times 1 \times 1)$ the features $b$; Backward fills their diff with gradients if propagate_down[1]

Implements caffe::Layer< Dtype >.
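To make the gradient concrete, here is a minimal, self-contained sketch of the derivative of a contrastive loss of the form $\frac{1}{2N} \sum_n [y\, d^2 + (1 - y) \max(\mathrm{margin} - d, 0)^2]$ with respect to the first feature input. It is not the Caffe implementation: the helper name `ContrastiveGradWrtA`, the nested-vector layout, a loss weight of 1, and the choice to return a zero gradient when the distance is exactly zero are all assumptions of this sketch.

```cpp
#include <cassert>
#include <cmath>
#include <cstddef>
#include <vector>

using Matrix = std::vector<std::vector<double>>;

// Hypothetical helper, not Caffe code: gradient of the contrastive loss
// with respect to the features a, assuming a loss weight of 1.
// The gradient with respect to b is the element-wise negation.
Matrix ContrastiveGradWrtA(const Matrix& a, const Matrix& b,
                           const std::vector<int>& y, double margin) {
  assert(a.size() == b.size() && a.size() == y.size() && !a.empty());
  const double inv_n = 1.0 / static_cast<double>(a.size());
  Matrix grad(a.size());
  for (std::size_t n = 0; n < a.size(); ++n) {
    assert(a[n].size() == b[n].size());
    std::vector<double> diff(a[n].size());
    double dist_sq = 0.0;
    for (std::size_t c = 0; c < diff.size(); ++c) {
      diff[c] = a[n][c] - b[n][c];
      dist_sq += diff[c] * diff[c];
    }
    const double dist = std::sqrt(dist_sq);
    double scale = 0.0;
    if (y[n] == 1) {
      // Similar pair: d/da of d^2 / (2N) is (a - b) / N.
      scale = inv_n;
    } else if (dist > 0.0 && dist < margin) {
      // Dissimilar pair inside the margin: pushes a away from b.
      scale = -inv_n * (margin - dist) / dist;
    }
    // Dissimilar pairs at or beyond the margin contribute no gradient.
    grad[n].resize(diff.size());
    for (std::size_t c = 0; c < diff.size(); ++c) {
      grad[n][c] = scale * diff[c];
    }
  }
  return grad;
}
```

The label input receives no gradient in this sketch, which matches the statement above that gradients are computed only for the two feature inputs.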

◆ ExactNumBottomBlobs()

virtual int ExactNumBottomBlobs () const [inline, virtual]

Returns the exact number of bottom blobs required by the layer, or -1 if no exact number is required.

This method should be overridden to return a non-negative value if your layer expects some exact number of bottom blobs.

Reimplemented from caffe::LossLayer< Dtype >.

◆ Forward_cpu()

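virtual void Forward_cpu (const vector< Blob< Dtype > *> &bottom, const vector< Blob< Dtype > *> &top) [protected, virtual]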

Computes the contrastive loss $E = \frac{1}{2N} \sum_{n=1}^{N} \left[ y\, d^2 + (1 - y) \max(\mathrm{margin} - d, 0)^2 \right]$, where $d = \lVert a_n - b_n \rVert_2$. This can be used to train siamese networks.

Parameters

- bottom: input Blob vector (length 3)
  - $(N \times C \times 1 \times 1)$ the features $a \in [-\infty, +\infty]$
  - $(N \times C \times 1 \times 1)$ the features $b \in [-\infty, +\infty]$
  - $(N \times 1 \times 1 \times 1)$ the binary similarity $s \in [0, 1]$ (written $y$ in the loss formula)
- top: output Blob vector (length 1)
  - $(1 \times 1 \times 1 \times 1)$ the computed contrastive loss $E$, as defined above

Implements caffe::Layer< Dtype >.
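As an illustration of the forward computation, the following self-contained sketch evaluates a contrastive loss of the form $\frac{1}{2N} \sum_n [y\, d^2 + (1 - y) \max(\mathrm{margin} - d, 0)^2]$ on plain vectors. It is a sketch under stated assumptions rather than the Caffe implementation: the function name `ContrastiveLossSketch` and the nested-vector layout (one inner vector per pair) are hypothetical, and Blob handling is left out entirely.

```cpp
#include <algorithm>
#include <cassert>
#include <cmath>
#include <cstddef>
#include <vector>

// Hypothetical helper, not part of Caffe: contrastive loss over N pairs.
// a and b each hold N feature vectors of equal length; y holds N labels
// (1 = similar pair, 0 = dissimilar pair).
double ContrastiveLossSketch(const std::vector<std::vector<double>>& a,
                             const std::vector<std::vector<double>>& b,
                             const std::vector<int>& y,
                             double margin) {
  assert(a.size() == b.size() && a.size() == y.size() && !a.empty());
  double loss = 0.0;
  for (std::size_t n = 0; n < a.size(); ++n) {
    assert(a[n].size() == b[n].size());
    // Squared Euclidean distance between the two feature vectors.
    double dist_sq = 0.0;
    for (std::size_t c = 0; c < a[n].size(); ++c) {
      const double diff = a[n][c] - b[n][c];
      dist_sq += diff * diff;
    }
    if (y[n] == 1) {
      // Similar pairs are penalised by their squared distance.
      loss += dist_sq;
    } else {
      // Dissimilar pairs are penalised only while closer than the margin.
      const double gap = std::max(margin - std::sqrt(dist_sq), 0.0);
      loss += gap * gap;
    }
  }
  return loss / (2.0 * static_cast<double>(a.size()));
}
```

For example, with one similar pair at distance 5 and one dissimilar pair at distance 1 under a margin of 2, the two terms are 25 and 1, so the loss is 26 / (2 · 2) = 6.5.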

◆ LayerSetUp()

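virtual void LayerSetUp (const vector< Blob< Dtype > *> &bottom, const vector< Blob< Dtype > *> &top) [virtual]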

Does layer-specific setup: your layer should implement this function as well as Reshape.

Parameters

- bottom: the preshaped input blobs, whose data fields store the input data for this layer
- top: the allocated but unshaped output blobs

This method should do one-time layer-specific setup. This includes reading and processing relevant parameters from the layer_param_. Setting up the shapes of top blobs and internal buffers should be done in Reshape, which will be called before the forward pass to adjust the top blob sizes.

Reimplemented from caffe::LossLayer< Dtype >.

The documentation for this class was generated from the following files:

- include/caffe/layers/contrastive_loss_layer.hpp

- src/caffe/layers/contrastive_loss_layer.cpp

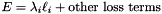

, as

, as  in the overall

in the overall  ; hence

; hence  . (*Assuming that this top

. (*Assuming that this top  1.8.13

1.8.13