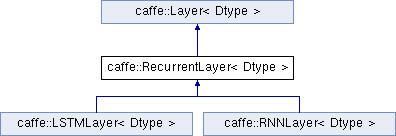

An abstract class for implementing recurrent behavior inside of an unrolled network. This Layer type cannot be instantiated – instead, you should use one of its implementations which defines the recurrent architecture, such as RNNLayer or LSTMLayer. More...

#include <recurrent_layer.hpp>

Public Member Functions | |

| RecurrentLayer (const LayerParameter ¶m) | |

| virtual void | LayerSetUp (const vector< Blob< Dtype > *> &bottom, const vector< Blob< Dtype > *> &top) |

| Does layer-specific setup: your layer should implement this function as well as Reshape. More... | |

| virtual void | Reshape (const vector< Blob< Dtype > *> &bottom, const vector< Blob< Dtype > *> &top) |

| Adjust the shapes of top blobs and internal buffers to accommodate the shapes of the bottom blobs. More... | |

| virtual void | Reset () |

| virtual const char * | type () const |

| Returns the layer type. | |

| virtual int | MinBottomBlobs () const |

| Returns the minimum number of bottom blobs required by the layer, or -1 if no minimum number is required. More... | |

| virtual int | MaxBottomBlobs () const |

| Returns the maximum number of bottom blobs required by the layer, or -1 if no maximum number is required. More... | |

| virtual int | ExactNumTopBlobs () const |

| Returns the exact number of top blobs required by the layer, or -1 if no exact number is required. More... | |

| virtual bool | AllowForceBackward (const int bottom_index) const |

| Return whether to allow force_backward for a given bottom blob index. More... | |

Public Member Functions inherited from caffe::Layer< Dtype > Public Member Functions inherited from caffe::Layer< Dtype > | |

| Layer (const LayerParameter ¶m) | |

| void | SetUp (const vector< Blob< Dtype > *> &bottom, const vector< Blob< Dtype > *> &top) |

| Implements common layer setup functionality. More... | |

| Dtype | Forward (const vector< Blob< Dtype > *> &bottom, const vector< Blob< Dtype > *> &top) |

| Given the bottom blobs, compute the top blobs and the loss. More... | |

| void | Backward (const vector< Blob< Dtype > *> &top, const vector< bool > &propagate_down, const vector< Blob< Dtype > *> &bottom) |

| Given the top blob error gradients, compute the bottom blob error gradients. More... | |

| vector< shared_ptr< Blob< Dtype > > > & | blobs () |

| Returns the vector of learnable parameter blobs. | |

| const LayerParameter & | layer_param () const |

| Returns the layer parameter. | |

| virtual void | ToProto (LayerParameter *param, bool write_diff=false) |

| Writes the layer parameter to a protocol buffer. | |

| Dtype | loss (const int top_index) const |

| Returns the scalar loss associated with a top blob at a given index. | |

| void | set_loss (const int top_index, const Dtype value) |

| Sets the loss associated with a top blob at a given index. | |

| virtual int | ExactNumBottomBlobs () const |

| Returns the exact number of bottom blobs required by the layer, or -1 if no exact number is required. More... | |

| virtual int | MinTopBlobs () const |

| Returns the minimum number of top blobs required by the layer, or -1 if no minimum number is required. More... | |

| virtual int | MaxTopBlobs () const |

| Returns the maximum number of top blobs required by the layer, or -1 if no maximum number is required. More... | |

| virtual bool | EqualNumBottomTopBlobs () const |

| Returns true if the layer requires an equal number of bottom and top blobs. More... | |

| virtual bool | AutoTopBlobs () const |

| Return whether "anonymous" top blobs are created automatically by the layer. More... | |

| bool | param_propagate_down (const int param_id) |

| Specifies whether the layer should compute gradients w.r.t. a parameter at a particular index given by param_id. More... | |

| void | set_param_propagate_down (const int param_id, const bool value) |

| Sets whether the layer should compute gradients w.r.t. a parameter at a particular index given by param_id. | |

Protected Member Functions | |

| virtual void | FillUnrolledNet (NetParameter *net_param) const =0 |

| Fills net_param with the recurrent network architecture. Subclasses should define this – see RNNLayer and LSTMLayer for examples. | |

| virtual void | RecurrentInputBlobNames (vector< string > *names) const =0 |

| Fills names with the names of the 0th timestep recurrent input Blob&s. Subclasses should define this – see RNNLayer and LSTMLayer for examples. | |

| virtual void | RecurrentInputShapes (vector< BlobShape > *shapes) const =0 |

| Fills shapes with the shapes of the recurrent input Blob&s. Subclasses should define this – see RNNLayer and LSTMLayer for examples. | |

| virtual void | RecurrentOutputBlobNames (vector< string > *names) const =0 |

| Fills names with the names of the Tth timestep recurrent output Blob&s. Subclasses should define this – see RNNLayer and LSTMLayer for examples. | |

| virtual void | OutputBlobNames (vector< string > *names) const =0 |

| Fills names with the names of the output blobs, concatenated across all timesteps. Should return a name for each top Blob. Subclasses should define this – see RNNLayer and LSTMLayer for examples. | |

| virtual void | Forward_cpu (const vector< Blob< Dtype > *> &bottom, const vector< Blob< Dtype > *> &top) |

| virtual void | Forward_gpu (const vector< Blob< Dtype > *> &bottom, const vector< Blob< Dtype > *> &top) |

| Using the GPU device, compute the layer output. Fall back to Forward_cpu() if unavailable. | |

| virtual void | Backward_cpu (const vector< Blob< Dtype > *> &top, const vector< bool > &propagate_down, const vector< Blob< Dtype > *> &bottom) |

| Using the CPU device, compute the gradients for any parameters and for the bottom blobs if propagate_down is true. | |

Protected Member Functions inherited from caffe::Layer< Dtype > Protected Member Functions inherited from caffe::Layer< Dtype > | |

| virtual void | Backward_gpu (const vector< Blob< Dtype > *> &top, const vector< bool > &propagate_down, const vector< Blob< Dtype > *> &bottom) |

| Using the GPU device, compute the gradients for any parameters and for the bottom blobs if propagate_down is true. Fall back to Backward_cpu() if unavailable. | |

| virtual void | CheckBlobCounts (const vector< Blob< Dtype > *> &bottom, const vector< Blob< Dtype > *> &top) |

| void | SetLossWeights (const vector< Blob< Dtype > *> &top) |

Protected Attributes | |

| shared_ptr< Net< Dtype > > | unrolled_net_ |

| A Net to implement the Recurrent functionality. | |

| int | N_ |

| The number of independent streams to process simultaneously. | |

| int | T_ |

| The number of timesteps in the layer's input, and the number of timesteps over which to backpropagate through time. | |

| bool | static_input_ |

| Whether the layer has a "static" input copied across all timesteps. | |

| int | last_layer_index_ |

| The last layer to run in the network. (Any later layers are losses added to force the recurrent net to do backprop.) | |

| bool | expose_hidden_ |

| Whether the layer's hidden state at the first and last timesteps are layer inputs and outputs, respectively. | |

| vector< Blob< Dtype > *> | recur_input_blobs_ |

| vector< Blob< Dtype > *> | recur_output_blobs_ |

| vector< Blob< Dtype > *> | output_blobs_ |

| Blob< Dtype > * | x_input_blob_ |

| Blob< Dtype > * | x_static_input_blob_ |

| Blob< Dtype > * | cont_input_blob_ |

Protected Attributes inherited from caffe::Layer< Dtype > Protected Attributes inherited from caffe::Layer< Dtype > | |

| LayerParameter | layer_param_ |

| Phase | phase_ |

| vector< shared_ptr< Blob< Dtype > > > | blobs_ |

| vector< bool > | param_propagate_down_ |

| vector< Dtype > | loss_ |

Detailed Description

template<typename Dtype>

class caffe::RecurrentLayer< Dtype >

An abstract class for implementing recurrent behavior inside of an unrolled network. This Layer type cannot be instantiated – instead, you should use one of its implementations which defines the recurrent architecture, such as RNNLayer or LSTMLayer.

Member Function Documentation

◆ AllowForceBackward()

|

inlinevirtual |

Return whether to allow force_backward for a given bottom blob index.

If AllowForceBackward(i) == false, we will ignore the force_backward setting and backpropagate to blob i only if it needs gradient information (as is done when force_backward == false).

Reimplemented from caffe::Layer< Dtype >.

◆ ExactNumTopBlobs()

|

inlinevirtual |

Returns the exact number of top blobs required by the layer, or -1 if no exact number is required.

This method should be overridden to return a non-negative value if your layer expects some exact number of top blobs.

Reimplemented from caffe::Layer< Dtype >.

◆ Forward_cpu()

|

protectedvirtual |

- Parameters

-

bottom input Blob vector (length 2-3)

the time-varying input

the time-varying input  . After the first two axes, whose dimensions must correspond to the number of timesteps

. After the first two axes, whose dimensions must correspond to the number of timesteps  and the number of independent streams

and the number of independent streams  , respectively, its dimensions may be arbitrary. Note that the ordering of dimensions –

, respectively, its dimensions may be arbitrary. Note that the ordering of dimensions –  , rather than

, rather than  – means that the

– means that the  independent input streams must be "interleaved".

independent input streams must be "interleaved". the sequence continuation indicators

the sequence continuation indicators  . These inputs should be binary (0 or 1) indicators, where

. These inputs should be binary (0 or 1) indicators, where  means that timestep

means that timestep  of stream

of stream  is the beginning of a new sequence, and hence the previous hidden state

is the beginning of a new sequence, and hence the previous hidden state  is multiplied by

is multiplied by  and has no effect on the cell's output at timestep

and has no effect on the cell's output at timestep  , and a value of

, and a value of  means that timestep

means that timestep  of stream

of stream  is a continuation from the previous timestep

is a continuation from the previous timestep  , and the previous hidden state

, and the previous hidden state  affects the updated hidden state and output.

affects the updated hidden state and output. (optional) the static (non-time-varying) input

(optional) the static (non-time-varying) input  . After the first axis, whose dimension must be the number of independent streams, its dimensions may be arbitrary. This is mathematically equivalent to using a time-varying input of

. After the first axis, whose dimension must be the number of independent streams, its dimensions may be arbitrary. This is mathematically equivalent to using a time-varying input of ![$ x'_t = [x_t; x_{static}] $](form_148.png) – i.e., tiling the static input across the

– i.e., tiling the static input across the  timesteps and concatenating with the time-varying input. Note that if this input is used, all timesteps in a single batch within a particular one of the

timesteps and concatenating with the time-varying input. Note that if this input is used, all timesteps in a single batch within a particular one of the  streams must share the same static input, even if the sequence continuation indicators suggest that difference sequences are ending and beginning within a single batch. This may require padding and/or truncation for uniform length.

streams must share the same static input, even if the sequence continuation indicators suggest that difference sequences are ending and beginning within a single batch. This may require padding and/or truncation for uniform length.

- Parameters

-

top output Blob vector (length 1)  the time-varying output

the time-varying output  , where

, where  is

is recurrent_param.num_output(). Refer to documentation for particular RecurrentLayer implementations (such as RNNLayer and LSTMLayer) for the definition of .

.

Implements caffe::Layer< Dtype >.

◆ LayerSetUp()

|

virtual |

Does layer-specific setup: your layer should implement this function as well as Reshape.

- Parameters

-

bottom the preshaped input blobs, whose data fields store the input data for this layer top the allocated but unshaped output blobs

This method should do one-time layer specific setup. This includes reading and processing relevent parameters from the layer_param_. Setting up the shapes of top blobs and internal buffers should be done in Reshape, which will be called before the forward pass to adjust the top blob sizes.

Reimplemented from caffe::Layer< Dtype >.

◆ MaxBottomBlobs()

|

inlinevirtual |

Returns the maximum number of bottom blobs required by the layer, or -1 if no maximum number is required.

This method should be overridden to return a non-negative value if your layer expects some maximum number of bottom blobs.

Reimplemented from caffe::Layer< Dtype >.

◆ MinBottomBlobs()

|

inlinevirtual |

Returns the minimum number of bottom blobs required by the layer, or -1 if no minimum number is required.

This method should be overridden to return a non-negative value if your layer expects some minimum number of bottom blobs.

Reimplemented from caffe::Layer< Dtype >.

◆ Reshape()

|

virtual |

Adjust the shapes of top blobs and internal buffers to accommodate the shapes of the bottom blobs.

- Parameters

-

bottom the input blobs, with the requested input shapes top the top blobs, which should be reshaped as needed

This method should reshape top blobs as needed according to the shapes of the bottom (input) blobs, as well as reshaping any internal buffers and making any other necessary adjustments so that the layer can accommodate the bottom blobs.

Implements caffe::Layer< Dtype >.

The documentation for this class was generated from the following files:

- include/caffe/layers/lstm_layer.hpp

- include/caffe/layers/recurrent_layer.hpp

- src/caffe/layers/recurrent_layer.cpp

1.8.13

1.8.13